I was attending a SQL Server 2016 launch event organized by PASS MN and the Minnesota BI User Group and I was listening to one of the Azure features with StretchDB. The claim was that it was very cost effective to have your archive data automagically shipped off to the cloud instead of residing on slow storage onsite. So the first question that came up in my mind is how cost efficient is it? If it is that compelling, then I need to know more! So that’s the topic of my blog post – storage cost for archived tables in Microsoft Azure.

There are several thoughts swimming around in my mind. If I need storage for old slow archive tables, I would prefer to have tiered storage. There are lots of ways to do this, but the major vendors have SANs you can buy that have 2-3 tiers of disk (SSD, Fast SCSI and Slow SCSI/SAS). If I had a big ol’ SAN that would place rarely used archive data in the lowest tier, then my storage would be optimized for me. Down to the multi-terabyte SAS disks you go! So if I had the capital for that type of hardware, I probably wouldn’t have too much angst about it. So would Azure compel me to move this data offsite? I’m thinking purely about cost.

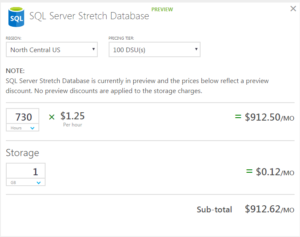

Let’s take a look at the cost for the Azure space. I am assuming I would set-up a North-Central StretchDB instance. Let’s say I want 1 GB of cold storage for the whole month. The storage is a nice cheap $0.025/Gig. But, per the documentation, you also have to pay compute costs. The lowest level shows the “100 DSU” rate of $1.25/hour. Also per the documentation, we pay for the hours the stretched database is online. So if I want this feature permanently on for the month, I’m at (30.42 days x 24 hours) x $1.25 = $912.60. Add on the paltry $0.025 and we still get a hefty bill. This assumes no data flows back to your data center. Outbound data is charged, too.

Screenshot of 1 GB of stretchDB costs for a month. Source from Microsoft here

So, from a cost perspective alone, you want to have a lot of data to ship off that is rarely accessed. That being said, I can get a lot of tier 3 storage space for my server at that price (5-10+ TB depending on the drive type). So, unless I had a niche situation, I would be more inclined to keep my data in-house until there was an explicit problem to solve. What are your thoughts on this? What would compel you to stretch your data to Azure?